Rust supported parenting

jcbellido June 18, 2021 [Code] #LillaOst #Rust

Where we use Rust, PostgreSQL, mobile phones and a RaspberryPi3 to keep track of our kid's events and we officially became data-driven parents.

The last 18 months have been quite intense for us. Beyond da warus, the transition to full-time WFH and changing jobs, we had a kid and we moved to a new apartment. When our kid was a newborn we realized how useful it was to keep track of feedings and nappy changes in a centralized manner. Taking advantage of many sleepless nights, I went full-stack Rust and wrote a web app to support us. And, honoring an internal joke, we call it:

LillaOst: a web app written in Rust to track your kid's daily events.

Introducing LillaOst

LillaOst is an event tracker specialized in data about newborns and very young babies. Our goal was to track 2 main events:

- Feedings: source and amounts in ml.

- Nappy changes: with an idea of the payload delivered.

And, paramount to me, it had to be completely private.
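The two event kinds above could be modeled along these lines. This is only a sketch: the type names, the variants of `FeedSource` and the `Payload` scale are assumptions for illustration, not the actual LillaOst schema.

```rust
/// Where a feeding came from. The variants are assumptions for illustration.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum FeedSource {
    Breast,
    Bottle,
}

/// Rough size of a nappy's contents. A hypothetical scale.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Payload {
    Small,
    Medium,
    Large,
}

/// The two kinds of events the app tracks.
#[derive(Debug, Clone, PartialEq)]
pub enum Event {
    Feeding { source: FeedSource, amount_ml: u32 },
    NappyChange { payload: Payload },
}

impl Event {
    /// One-line, human-readable summary of the event.
    pub fn summary(&self) -> String {
        match self {
            Event::Feeding { source, amount_ml } => {
                format!("{:?}, {} ml", source, amount_ml)
            }
            Event::NappyChange { payload } => format!("nappy: {:?}", payload),
        }
    }
}
```

An enum keeps each event's data attached to its kind, so a feeding can never end up with a nappy payload by accident.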

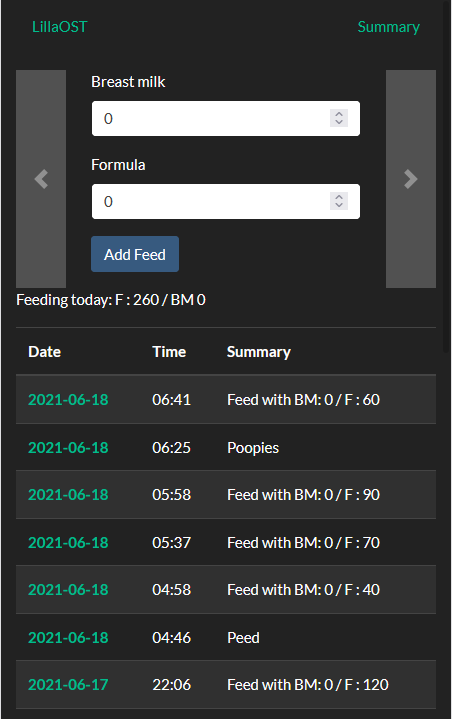

Our UX was very simply defined as: we need to use this app in the middle of the night in pitch dark rooms, with a single hand if possible. When we reached this version:

it was clear that we were up to something usable.

Summary views: WebAssembly

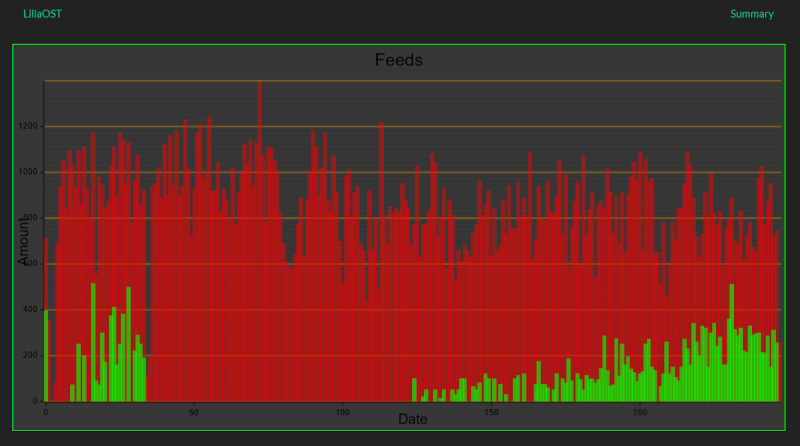

After some days of data input we wanted to have an overview on daily feedings. Trends on daily food intakes usually mean that the kid is going through something, perhaps teeth are coming, maybe a small illness or other disturbances. After some searching I found about plotters

data visualization is a complex field but that graph contains an apartment move and the first 2 teeth.
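A graph of daily intake needs one total per day. A minimal sketch of that aggregation in plain Rust follows. The `Feeding` struct and its field names are assumptions, and the plotting step itself is left out.

```rust
use std::collections::BTreeMap;

/// A single feeding: the day it happened (ISO date string) and the amount.
/// Both field names are assumptions for this sketch.
pub struct Feeding {
    pub date: String,
    pub amount_ml: u32,
}

/// Sum the ml fed per day. A BTreeMap keeps the days sorted, which is
/// convenient when the totals are later drawn left to right.
pub fn daily_totals(feedings: &[Feeding]) -> BTreeMap<String, u32> {
    let mut totals = BTreeMap::new();
    for f in feedings {
        *totals.entry(f.date.clone()).or_insert(0) += f.amount_ml;
    }
    totals
}
```

The resulting `(day, total)` pairs are the kind of series a plotting library can turn into a line or bar chart.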

Using Rust on the web

When I started this project I knew very little Rust. I was barely at the "What's this fuss about Rust? Is this real?" stage, doing some basic tutorials and watching a couple of videos. It was after checking the official documentation that I was impressed:

- The Rust Programming Language is a great introductory manual, perhaps comparable to classics such as K&R.

- Rust by Example is the perfect companion for the language manual. And for those more oriented to "learn by doing", maybe even better than the manual.

But it was an "Are we XYZ?" page that caught my eye: Are we web yet?. It was the listing on this page, combined with Reddit's Rust community, that finally sent me down this path.

Note: At the bottom of this article in the .Net Core on a RaspberryPi3B section you have a sneak peek on other options I considered before committing to Rust.

What follows are the main components of LillaOst.

Diesel

Much to some of my colleagues' dismay, I like ORMs and I wanted to know more about Rust's options in that space. That's where Diesel comes in. My plan was to abstract myself as much as possible from plain SQL. I wanted to traffic structs back and forth.

Diesel is a big and complete library and can be complex sometimes. Approaching it was surprisingly simple: the documentation was, again, excellent. In particular, the Getting Started with Diesel section was a breeze to go through.

As an example let me share the backend code that renders the Overview (screen capture above). In the following code

we're fetching data from 2 different tables, Feed and Event, and we're combining the results in an easy-to-digest format for the UI.
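The combining step can be sketched in plain Rust, leaving the Diesel queries out. The field names `overview_type`, `date`, `time` and `summary`, and the `"feed"` value, are the ones the summary template uses. Everything else is hypothetical: the `FeedRow` and `EventRow` shapes, the `"event"` value, and the newest-first ordering.

```rust
/// Row shapes standing in for what the two queries return (hypothetical).
pub struct FeedRow {
    pub date: String,
    pub time: String,
    pub amount_ml: u32,
}

pub struct EventRow {
    pub date: String,
    pub time: String,
    pub description: String,
}

/// One line of the overview, ready to hand to the template.
#[derive(Debug, PartialEq)]
pub struct OverviewEntry {
    pub overview_type: String,
    pub date: String,
    pub time: String,
    pub summary: String,
}

/// Merge both result sets into a single list, newest entry first.
pub fn build_overview(feeds: Vec<FeedRow>, events: Vec<EventRow>) -> Vec<OverviewEntry> {
    let mut entries: Vec<OverviewEntry> = feeds
        .into_iter()
        .map(|f| OverviewEntry {
            overview_type: "feed".to_string(),
            date: f.date,
            time: f.time,
            summary: format!("{} ml", f.amount_ml),
        })
        .chain(events.into_iter().map(|e| OverviewEntry {
            overview_type: "event".to_string(),
            date: e.date,
            time: e.time,
            summary: e.description,
        }))
        .collect();
    // ISO dates and zero-padded HH:MM times sort correctly as plain text.
    entries.sort_by(|a, b| (&b.date, &b.time).cmp(&(&a.date, &a.time)));
    entries
}
```

Flattening both tables into one struct means the template only has to loop over a single list.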

Handlebars

LillaOst is doing a lot of server side page rendering. It's something that "sorta happened". Essentially I'm using a collection of Handlebars templates to generate easy to parse HTML, as an example:

    Date
    Time
    Summary
    {{#each overview_entries}}
      {{#if (eq this.overview_type "feed")}}
        {{this.date}}
      {{else}}
        {{this.date}}
      {{/if}}
      {{this.time}}
      {{this.summary}}
    {{/each}}

that's the code responsible for rendering the summary page.

Actix

When looking for alternatives on how to serve web and deploy endpoints I found at least two viable alternatives:

Checking the GitHub activity, in particular in the examples sections, I finally opted for actix. I'm sure both of them are perfectly capable of serving a site as simple as LO.

I decided to go full server-side page generation (see the Handlebars section for more details). As an example, the code that returns the main page, the image at the beginning of this article, looks like this:

    use PgConnection;
    use ;
    type DbPool = Pool;
    pub async

where we have a round trip to the DB that is then handlebar-ed into HTML. In my production code I have a bunch of trace! calls sprinkled around, but this is it.

An interesting detail that flew completely over my head as a Rust noob was the async keyword. I was completely used to it coming from C# and I didn't think twice about it. It was weeks later when I started to hear about:

Sharing LillaOst: Yew + LocalStorage

LillaOst has been a great help for my little family. It helped us to better understand our kid's needs and, from time to time, even predict what was going to happen in the next hour or so.

I imagine that every parent develops a system: from notebooks to phone notes to full cloud-supported solutions. But I keep being surprised by how pedestrian most of these solutions are. And I'm convinced that, given the option of using our solution, other parents would have an easier time.

But how to share it? How to go wide? As it is today LillaOst has too many moving parts:

- a WiFi LAN

- an always-on computer

- git

- rust pipeline

- PostgreSQL + diesel setup

It's not difficult to deploy but the setup has too many steps to be considered accessible.

As I see it, my next step is to go full web-based. The plan is to publish a web app that keeps all its data on a single device. That seems a good initial compromise between privacy and ease of deployment.

The route ahead is Rust, quite probably with Yew + localStorage (or comparable).
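To make the "all data on a single device" idea concrete, here is a rough sketch of how feedings could live behind a string key-value interface, which is the shape localStorage offers (string values under string keys). Everything here is an assumption: the `KeyValueStore` trait, the in-memory stand-in, the `lillaost.feedings` key and the line-based encoding. None of it is Yew or browser API.

```rust
use std::collections::HashMap;

/// Minimal string key-value interface. The trait itself is hypothetical.
pub trait KeyValueStore {
    fn get(&self, key: &str) -> Option<String>;
    fn set(&mut self, key: &str, value: &str);
}

/// In-memory stand-in so the sketch runs outside a browser.
#[derive(Default)]
pub struct MemoryStore(HashMap<String, String>);

impl KeyValueStore for MemoryStore {
    fn get(&self, key: &str) -> Option<String> {
        self.0.get(key).cloned()
    }

    fn set(&mut self, key: &str, value: &str) {
        self.0.insert(key.to_string(), value.to_string());
    }
}

/// Hypothetical key under which all feedings are kept.
const FEEDINGS_KEY: &str = "lillaost.feedings";

/// Append one feeding as a "timestamp,amount_ml" line.
pub fn add_feeding(store: &mut dyn KeyValueStore, timestamp: &str, amount_ml: u32) {
    let mut data = store.get(FEEDINGS_KEY).unwrap_or_default();
    data.push_str(&format!("{},{}\n", timestamp, amount_ml));
    store.set(FEEDINGS_KEY, &data);
}

/// Read every stored feeding back, skipping lines that fail to parse.
pub fn load_feedings(store: &dyn KeyValueStore) -> Vec<(String, u32)> {
    let data = store.get(FEEDINGS_KEY).unwrap_or_default();
    data.lines()
        .filter_map(|line| {
            let (timestamp, ml) = line.split_once(',')?;
            Some((timestamp.to_string(), ml.parse::<u32>().ok()?))
        })
        .collect()
}
```

Keeping the storage behind a small trait means the same logic can be tested natively and later backed by whatever the browser offers.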

Footnote: .Net Core on a RaspberryPi3B

When I started working on LillaOst I was immersed in the .Net CORE ecosystem and the only computer I had permanently connected in my place was a RaspberryPi3B. And that was my initial intuition: ASP .Net CORE. After some configuration it was possible to execute dotnet commands and I was able to compile and run ASP on an RP3B. And that was cool. I was literally blown away. I was compiling to an ARM processor!

With ASP .Net CORE I was able to start a Hello World! example in ~90 seconds, and the memory was almost completely consumed.

By contrast, the full actix stack is serving pages in less than 2 seconds. The compilation times are absolutely ridiculous, in the range of ~20 mins, but the total memory footprint for the web server was under 32MB.

It was clear that only a compiled-to-the-metal solution was going to cut it. My "server" was simply too weak to move anything else.